For a long time, NVIDIA’s CUDA has been the default choice for applications that need heavy GPU compute, which made NVIDIA’s graphics cards the preferred option for high-performance workloads. AMD graphics cards could not run CUDA because it is exclusive to NVIDIA’s hardware. There are now ways to run CUDA applications on AMD GPUs as well: AMD’s HIP and its HIPIFY tools convert CUDA source code so it can run on the ROCm platform, and third-party projects such as ZLUDA aim to run CUDA applications on AMD hardware without changing the original code.

In addition to third-party solutions, AMD has been actively working on its own frameworks and tools to improve the performance of machine learning and high-performance computing on its GPUs. It provides alternatives such as Radeon Open Compute (ROCm), which supports popular machine learning and deep learning frameworks. Users who have invested in AMD hardware therefore have more options when it comes to parallel computing and GPU-accelerated tasks. Compatibility layers and conversion tools have started to level the playing field, making machine learning and scientific computing more accessible across different GPU platforms.

AMD’s Alternatives to NVIDIA CUDA

NVIDIA’s CUDA (Compute Unified Device Architecture) is a popular technology for parallel computing and GPU acceleration. It’s widely used in fields like machine learning, scientific computing, and video editing. AMD, NVIDIA’s major competitor in the GPU market, offers several alternatives to CUDA. Let’s explore them:

OpenCL (Open Computing Language)

OpenCL is an open, royalty-free standard maintained by the Khronos Group for writing code that can run on CPUs, GPUs (from both AMD and NVIDIA), and other types of processors. It’s the closest cross-vendor equivalent to CUDA in terms of functionality, but it usually needs more setup code and can be more complex to use.
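
To make the cross-vendor point concrete, here is a minimal sketch that lists every OpenCL platform and device visible on a machine. It assumes the third-party `pyopencl` package and at least one installed OpenCL driver; the helper name `list_opencl_devices` is made up for this illustration. Without the package or a driver it simply returns an empty list.

```python
def list_opencl_devices() -> list[tuple[str, str]]:
    """Return (platform name, device name) pairs for all visible OpenCL devices."""
    try:
        import pyopencl as cl  # assumption: pyopencl is installed
    except ImportError:
        return []

    found = []
    try:
        for platform in cl.get_platforms():
            for device in platform.get_devices():
                found.append((platform.name, device.name))
    except Exception:
        # No OpenCL driver, or a platform that reports no devices.
        return found
    return found


for platform_name, device_name in list_opencl_devices():
    print(f"{platform_name}: {device_name}")
```

On a machine with both an AMD and an NVIDIA driver installed, both vendors would be expected to show up as separate platforms in the same listing.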

HIP (Heterogeneous-compute Interface for Portability)

HIP is a C++ runtime API and kernel language designed to make it easier to port CUDA code to AMD GPUs. Its syntax closely mirrors CUDA’s, and the same HIP source can be compiled for AMD hardware or, through a thin layer over CUDA, for NVIDIA hardware.

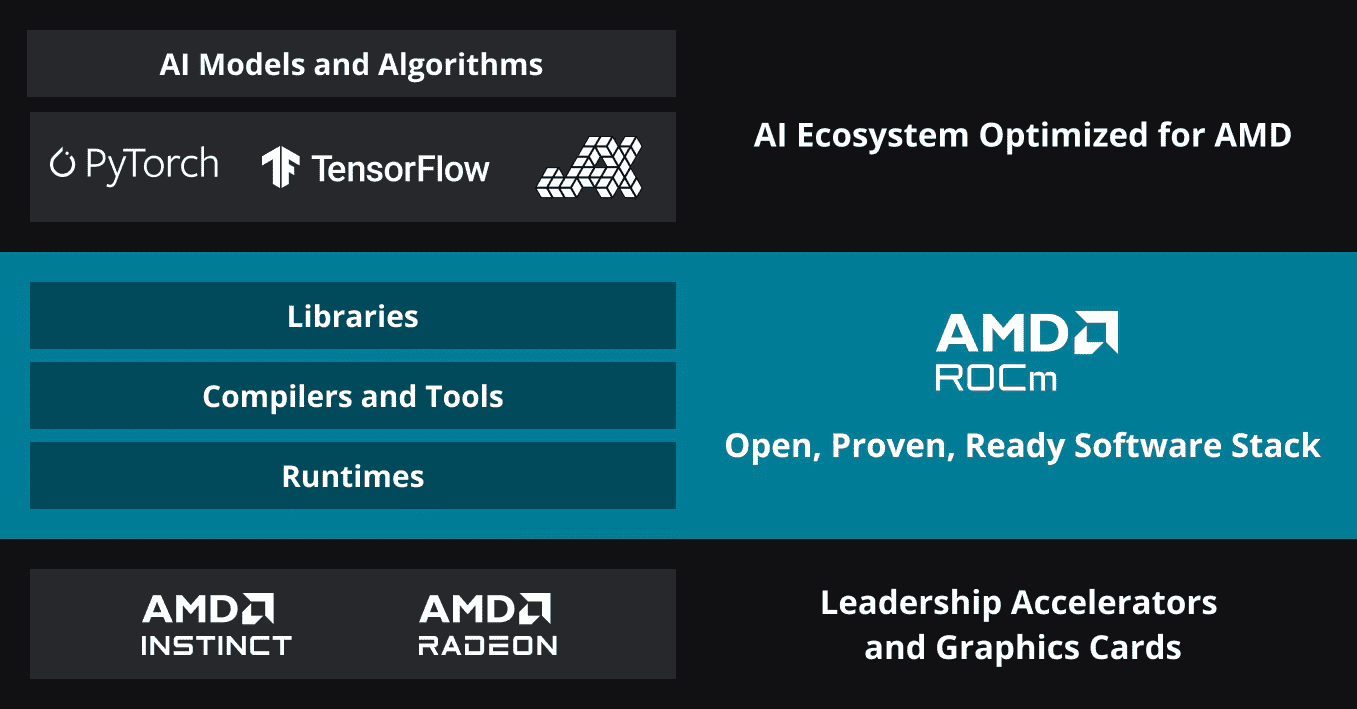

ROCm (Radeon Open Compute)

ROCm is a broader, open-source software platform by AMD that encompasses libraries, compilers, and tools for high-performance computing on AMD GPUs. It’s particularly relevant for machine learning and scientific applications.

Which One to Choose?

Here’s a quick comparison to help you decide:

| Technology | Advantages | Disadvantages | Best For |
|---|---|---|---|
| OpenCL | Cross-platform compatibility, open standard | Can be complex, performance optimization may be challenging | Broad range of applications, developers wanting full control |
| HIP | Easier porting of existing CUDA code, one source for AMD and NVIDIA GPUs | Code must be converted and recompiled, some CUDA features may not translate well | Developers moving CUDA projects to AMD |
| ROCm | Optimized for AMD hardware, strong focus on machine learning | Less mature than CUDA, primarily for AMD GPUs | Machine learning and scientific computing workloads |

Note: Software support and the ease of using these technologies continue to evolve. It’s best to assess what’s right for your specific project and the tools you’re familiar with.

Key Takeaways

- CUDA’s compatibility with AMD GPUs has expanded due to conversion tools and compatibility layers.

- AMD continues to develop its ROCm platform to support a range of computing tasks.

- The divide between NVIDIA and AMD GPUs in parallel computing is narrowing thanks to recent software advancements.

Comparative Analysis of GPU Architectures

Graphics Processing Units (GPUs) are the powerhouse for rendering images and accelerating computational tasks. This section looks at how companies such as AMD, NVIDIA, and Intel structure their GPUs, and how software like CUDA and OpenCL works with these devices.

AMD Radeon vs Nvidia CUDA Core Architecture

AMD’s Radeon GPUs use an architecture that focuses on parallel processing capabilities. These GPUs contain a large number of stream processors grouped into Compute Units (CUs), which manage work in a vector-oriented manner. This means that several operations can be performed simultaneously with high efficiency. AMD has developed Radeon Open Compute (ROCm) as an open-source platform that provides libraries and tools for GPU computing.

In contrast, Nvidia’s CUDA cores are scalar processors organized within streaming multiprocessors (SMs). CUDA, which stands for Compute Unified Device Architecture, is a parallel computing platform and programming model invented by Nvidia that allows software developers to use a CUDA-enabled GPU for general purpose processing – an approach termed GPGPU (General-Purpose computing on Graphics Processing Units). Nvidia boasts an elaborate ecosystem with their CUDA Toolkit which includes a compiler, debuggers, and performance analysis tools designed specifically for their GPUs.

Open-Source Alternatives and Compatibility Layers

Open-source projects and compatibility layers have become crucial in bridging the gap between different GPU architectures and making development more accessible. OpenCL is a framework used to write programs that execute across various platforms, including AMD and Nvidia GPUs, as well as other processors. It’s a flexible alternative to CUDA, which is specific to Nvidia. AMD’s ROCm is another notable open-source project allowing cross-platform GPU computing, providing tools to convert CUDA code to portable HIP code with its HIPIFY tool.

Furthermore, projects like ZLUDA aim to provide CUDA compatibility on non-NVIDIA GPUs. ZLUDA began as a drop-in replacement for CUDA on machines equipped with Intel GPUs, translating CUDA calls into Intel graphics calls, and later versions target AMD GPUs instead. In both cases the goal is the same: to let programs written for NVIDIA GPUs run unmodified on other hardware.

DirectX 12 is also significant in this landscape, especially for gaming. It is a low-level, low-overhead API that supports both AMD and NVIDIA GPUs and gives developers more direct control over the GPU for advanced visual effects and rendering techniques. Between proprietary and open-source platforms, developers have a range of options for getting the most out of GPU hardware.

Development and Programming on Radeon GPUs

For developers aiming to harness the power of AMD Radeon GPUs, several tools and frameworks are pivotal. Comprehensive environments like ROCm for GPU computing, the HIP toolkit for cross-platform development, and extensive library support ensure developers have what they need for building sophisticated programs across various platforms.

Utilizing ROCm for GPU Computing

ROCm, the Radeon Open Compute platform, is AMD’s open-source answer to high-performance, heterogeneous computing. It lets you program Radeon GPUs in languages like C++ for a variety of computing tasks such as AI and machine learning. ROCm also ships essential libraries for scientific computing, including BLAS and FFT implementations (rocBLAS and rocFFT). With version 5, ROCm continues to expand its functionality and compatibility across Linux systems.

Leveraging HIP for Cross-Platform Development

HIP, or Heterogeneous-compute Interface for Portability, enables developers to write single-source code that can run on both AMD and NVIDIA GPUs. The HIPIFY tools convert existing CUDA code to HIP, largely by renaming CUDA API calls to their HIP counterparts, so an application no longer depends on one vendor’s hardware. The resulting HIP code is compiled with hipcc, the compiler driver provided with the HIP toolkit. The toolkit is developed in the open on GitHub, where developers can share and collaborate on their GPU computing projects.
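
The renaming step can be illustrated with a toy script. This is only a sketch of the idea and not how the real HIPIFY tools are implemented: it covers a small hand-picked set of runtime calls, and the function name `toy_hipify` and the sample snippet are invented for this example.

```python
import re

# Small, hand-picked subset of CUDA-to-HIP renames. The real HIPIFY tools
# cover far more of the API and handle cases plain text substitution cannot.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaMemcpy": "hipMemcpy",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Try longer names first so "cudaMemcpyHostToDevice" is not cut short
# by the shorter "cudaMemcpy" alternative.
_PATTERN = re.compile(
    "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
)


def toy_hipify(source: str) -> str:
    """Replace the known CUDA identifiers in `source` with their HIP names."""
    return _PATTERN.sub(lambda match: CUDA_TO_HIP[match.group(0)], source)


cuda_snippet = """#include <cuda_runtime.h>
cudaMalloc(&d_a, bytes);
cudaMemcpy(d_a, h_a, bytes, cudaMemcpyHostToDevice);
vector_add<<<blocks, threads>>>(d_a, n);
cudaDeviceSynchronize();
cudaFree(d_a);
"""

hip_snippet = toy_hipify(cuda_snippet)
print(hip_snippet)
```

The kernel launch line passes through unchanged because HIP accepts the same triple-chevron launch syntax. For real projects, the HIPIFY tools are the right choice; a substitution table like this one breaks down as soon as the code uses anything outside the table.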

Libraries and Framework Support in Radeon Ecosystem

The Radeon ecosystem’s support for libraries and frameworks is extensive, facilitating machine learning and scientific research. AMD’s support for popular frameworks like PyTorch and TensorFlow ensures that developers working on machine learning can utilize Radeon GPUs for their computations. The ROCm libraries, including hipBLAS and hipFFT, provide fundamental building blocks for custom applications requiring sophisticated mathematical computations. Additionally, libraries for handling sparse data structures are available, catering to specialized requirements in data science and machine learning fields.

Frequently Asked Questions

This section addresses common inquiries regarding AMD’s GPU technologies and software frameworks for parallel computing and how they interact with NVIDIA’s CUDA.

What is AMD’s alternative to CUDA for parallel computing?

AMD provides ROCm, or Radeon Open Compute platform, as their main software for parallel computing. It is a platform that enables developers to create high-performance applications that can run on AMD GPUs.

How does AMD ROCm compare to Nvidia CUDA?

ROCm is an open-source platform designed to run on AMD GPUs, whereas CUDA is a proprietary platform by NVIDIA tailored specifically for their GPUs. ROCm aims to provide similar capabilities for parallel processing as CUDA but focuses on fostering an open ecosystem.

Can PyTorch be used with AMD GPUs for machine learning applications?

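Yes. PyTorch publishes builds for ROCm, and on those builds AMD GPUs are exposed through the same `torch.cuda` interface used on NVIDIA hardware, so most Python code runs without changes. Custom CUDA extensions are the main exception: they may need to be converted with HIP before they build for the ROCm platform.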
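
A quick way to check which backend an installed PyTorch build targets is sketched below. It assumes PyTorch may or may not be installed and falls back to a message if it is not; the helper name `describe_torch_backend` is made up for this example.

```python
def describe_torch_backend() -> str:
    """Return a short description of the GPU backend PyTorch was built for."""
    try:
        import torch  # assumption: PyTorch may not be installed
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "PyTorch found no usable GPU; computations will run on the CPU"

    name = torch.cuda.get_device_name(0)
    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version:
        return f"ROCm/HIP {hip_version} build, device: {name}"
    return f"CUDA {torch.version.cuda} build, device: {name}"


print(describe_torch_backend())
```

Note that on a ROCm build the device string is still `"cuda"`, so code such as `tensor.to("cuda")` works on AMD hardware without modification.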

What is the role of OpenCL in AMD’s GPU computing ecosystem?

OpenCL is a framework for writing programs that execute across varied platforms, including AMD GPUs. It is used widely in applications that require high-performance computing and is supported by ROCm for AMD’s GPU ecosystem.

Is it possible to use ROCm for applications originally written for CUDA?

Yes, in two ways. When the source code is available, HIPIFY can convert CUDA code to HIP so that the application builds on the ROCm stack. When it is not, projects like ZLUDA provide a compatibility layer that aims to execute CUDA binaries on AMD’s hardware without modifying the source code.

What are the capabilities of AMD’s GPUs in relation to CUDA cores?

The capabilities of AMD’s GPUs are often discussed in terms of “stream processors,” which are analogous to NVIDIA’s CUDA cores. These processors carry out the parallel computations and they are a key component in determining the performance capabilities of AMD GPUs for compute-intensive tasks.
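
As a rough worked example, theoretical peak throughput can be estimated from the number of stream processors. The sketch below assumes 64 stream processors per Compute Unit and two floating-point operations per stream processor per clock (one fused multiply-add); both figures vary by architecture, and the input values are illustrative rather than measurements of a particular card.

```python
SPS_PER_CU = 64             # assumption: stream processors per Compute Unit
FLOPS_PER_SP_PER_CLOCK = 2  # assumption: one fused multiply-add = 2 operations


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a GPU with the given number of CUs."""
    return compute_units * SPS_PER_CU


def peak_fp32_tflops(compute_units: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (not a benchmark result)."""
    gflops = stream_processors(compute_units) * FLOPS_PER_SP_PER_CLOCK * clock_ghz
    return gflops / 1000.0


# Illustrative inputs: 72 Compute Units running at 2.25 GHz.
print(stream_processors(72))                  # 4608
print(round(peak_fp32_tflops(72, 2.25), 2))   # 20.74
```

Real application performance usually falls well below this ceiling, since memory bandwidth, occupancy, and software maturity all play a part.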